Facebook has been supporting research into a brain-computer interface for the last several years. This is a form of technological mind-reading, with the long-term goal of making human interaction with machines faster and simpler: sending words and commands to a computer without needing to speak or move. Such technology would be very beneficial to Oculus, the virtual reality headset manufacturer owned by Facebook.

Facebook Reality Labs’ Brain-Computer Interface (BCI) project began in 2017 with the aim of creating an input method for augmented reality (AR) and virtual reality (VR) that would eliminate the admittedly awkward process of typing on a virtual keyboard with controllers or typing in the air with the hands. Speaking words and commands is not always convenient, or even possible, depending on the surroundings, so input remains a real obstacle to AR/VR becoming an alternative to traditional computers. In 2020, Facebook shared some early success from its work with the University of California San Francisco (UCSF); however, that study required electrodes to be implanted in the brain.

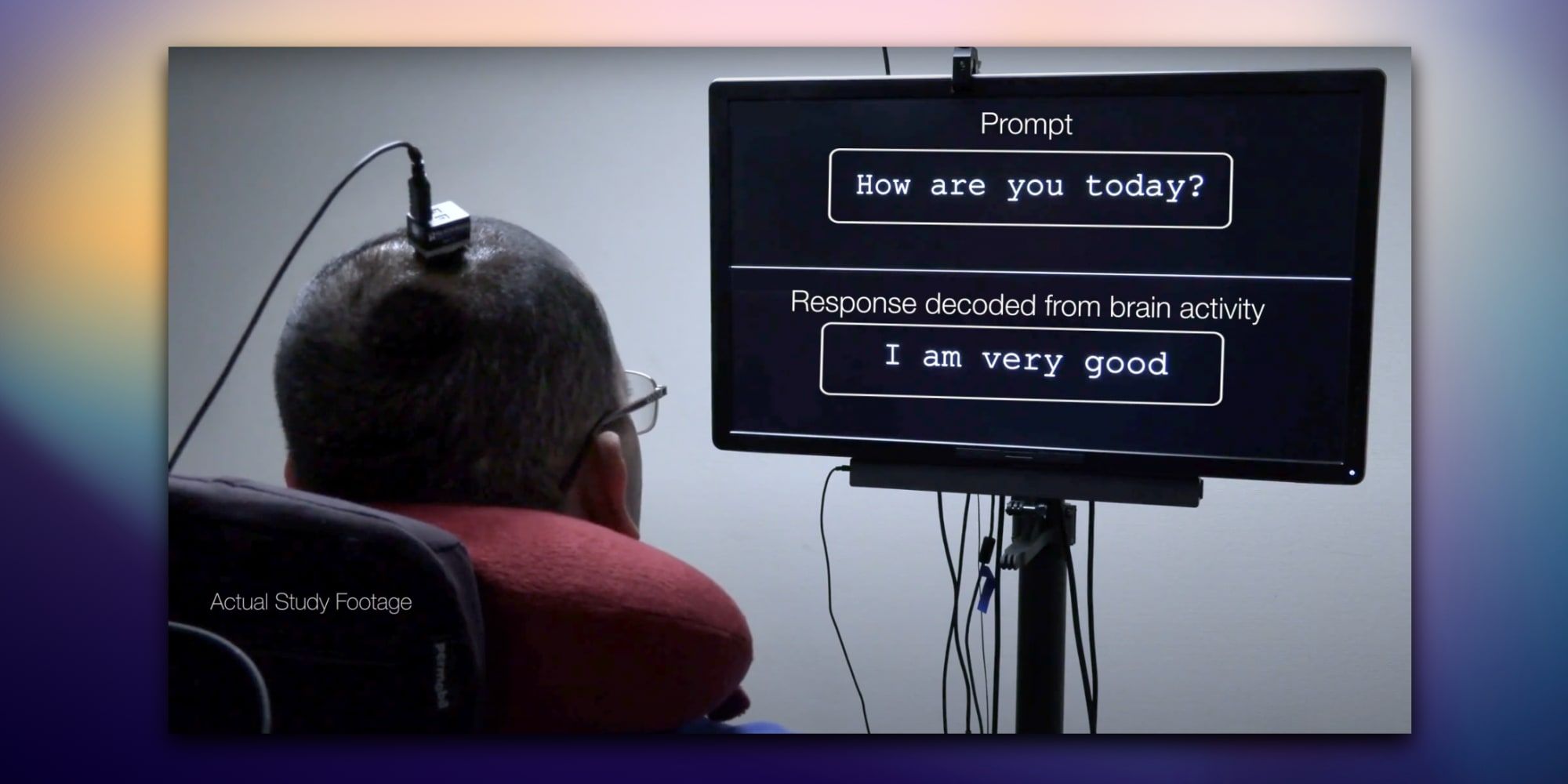

Facebook recently shared an update on its BCI project, highlighting what the effort has achieved so far. The project has advanced restorative communication for people who have lost the ability to communicate by voice or keyboard. Assistive technologies have made great progress but are often much slower than speaking or typing on a keyboard. Through Facebook's BCI project, a person who could not articulate speech was able to communicate using a vocabulary of 50 words at a rate of over 15 words per minute with the brain interface alone. Facebook has open-sourced its BCI software and will share its head-mounted hardware prototypes with researchers so the work can continue. Although the project has shown great promise for assistive technology, Facebook stated that it has no interest in developing products that require implanted electrodes, adding that consumer devices that detect brain activity remain a long-term goal and that its focus is shifting to wrist-based solutions.

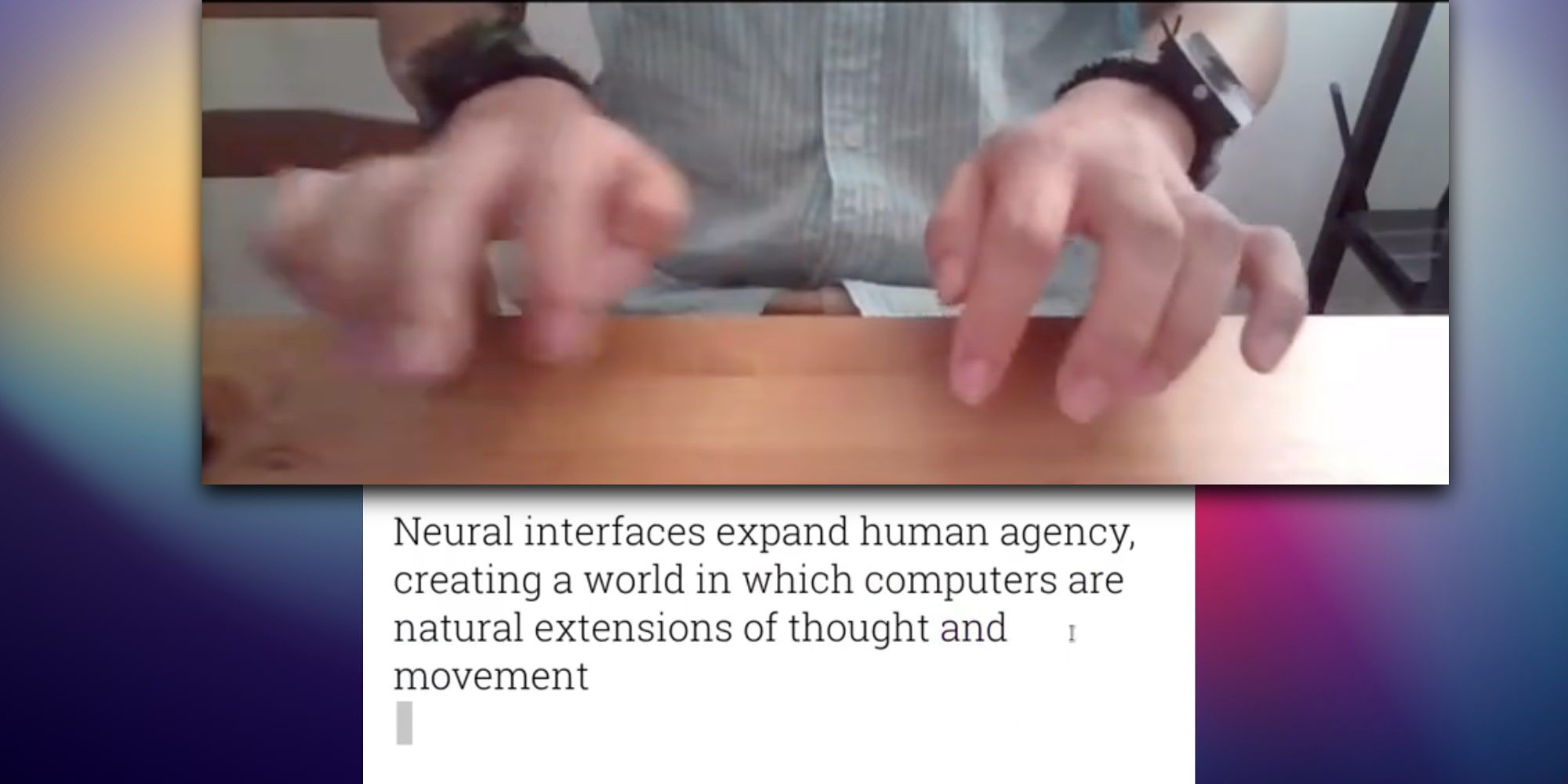

Facebook indicated that electromyography (EMG), which uses sensors at the wrist to pick up the electrical signals that motor nerves send to the hand, would be the new focus of its research. Apple recently announced a comparable feature with its watchOS 8 update: AssistiveTouch lets users control an Apple Watch with finger gestures made by the hand on the same arm as the watch. While Apple's technology recognizes only two types of movement, clenching the fist or pinching the thumb and forefinger together, Facebook will be working to detect a much wider range of motions. The work will begin with detecting taps and swipes; however, the long-term goal is still to enable high-speed typing, or some other interface that offers similar ease of input in AR and VR.

Although the wrist-based interface research is still in its early stages, Facebook shared progress on it earlier this year, demonstrating a prototype that could detect input from multiple fingers. Using two prototype wristbands to track finger motion and hand position, it even allowed touch typing on a table as if a keyboard were present. Facebook's brain-computer interface research has benefited assistive technology, but the company is shifting to wrist-based interfaces for nearer-term use in upcoming AR and VR products.

Source: Facebook

from ScreenRant - Feed https://ift.tt/2Tf27FZ
